[IBM's new AI chips are 100 times more powerful than GPUs, and new technologies can store and process weighted data in the same location] IBM's new chip design can accelerate the training of fully-connected neural networks by performing calculations at the data storage location. The researchers said that this "chip" can achieve 280 times the GPU's energy efficiency, and achieve 100 times the power in the same area. The research paper has been published in the Nature journal published last week.

The method of running a neural network with a GPU has brought about an amazing development in the field of artificial intelligence in recent years, but the combination of the two is actually not perfect. IBM researchers hope to design a new chip specifically for neural networks to make the former run faster and more efficiently.

It was not until the beginning of this century that researchers discovered that graphics processing units (GPUs) designed for video games could be used as hardware accelerators to run larger neural networks.

Because these chips can perform a large number of parallel operations without having to perform them sequentially like a conventional CPU. This is particularly useful for simultaneously calculating the weights of hundreds of neurons, and today's deep learning networks are composed of a large number of neurons.

Although the introduction of GPUs has allowed the field of artificial intelligence to achieve rapid development, these chips still need to be separated from processing and storage, which means that it takes a lot of time and effort to transfer data between the two. This has prompted people to begin researching new storage technologies that can store and process these weight data in the same place, thereby increasing speed and energy efficiency.

This new type of storage device stores data in analog form by adjusting its resistance level, ie it stores data on a continuous scale instead of binary 1 and 0 of digital memory. And because the information is stored in the conductance of the memory cell, calculations can be performed by simply passing the voltage through all the memory cells and letting the system through the physical method.

However, the inherent physical defects in these devices can lead to inconsistent behavior, which means that the current classification training accuracy achieved using this method to train the neural network is significantly lower than the calculation using the GPU.

In an earlier interview with SingularityHub, Stefano Ambrogio, an IBM Research postdoctoral researcher in charge of the project, said: “We can train on a system that is faster than GPU, but if the training operation is not accurate enough, then it is useless. So far, there is no evidence that Using these new devices is as accurate as using a GPU."

However, with the progress of research, new technologies have demonstrated their strength. In a paper published in the journal Nature last week (Equivalent-accuracyacceleratedneural-networktrainingusinganaloguememory), Ambrogio and his colleagues described how to create a chip using a new combination of analog memory and more traditional electronic components. The chip matches the accuracy of the GPU with faster operation and less power consumption.

The reason that these new storage technologies are difficult to train deep neural networks is that this process requires thousands of stimulations of the weight of each neuron up and down until the network is fully aligned. Ambrogio said that changing the resistance of these devices requires reconfiguring their atomic structures, which are different each time. The intensity of the stimulus is not always exactly the same, which leads to inaccurate adjustment of neuron weights.

The researchers created a "synaptic unit" to solve this problem. Each unit corresponds to a single neuron in the network, both long-term memory and short-term memory. Each cell is composed of a pair of phase change memory (PCM) cells and a combination of three transistors and one capacitor. The phase change memory cell stores weight data in its resistance, and the capacitor stores the weight data as charge.

PCM is a kind of "non-volatile memory", which means that even if there is no external power source, it retains the stored information, and the capacitor is "volatile", so it can only maintain its charge for a few milliseconds. But capacitors do not have the variability of PCM devices, so they can be programmed quickly and accurately.

When the neural network can perform classification tasks after picture training, only the capacitor weights are updated. After observing thousands of pictures, weights are transmitted to the PCM unit for long-term storage.

The variability of PCM means that the transfer of weighted data may still be erroneous, but because the unit is updated only occasionally, the system can again check the conductivity without adding much complexity. "If you train directly on the PCM unit, it is not feasible," Ambrogio said.

To test new devices, the researchers trained their neural networks in a series of popular image recognition benchmarks and achieved accuracy comparable to Google's neural network framework TensorFlow. But more importantly, they predict that the resulting chip can achieve 280 times the GPU's energy efficiency and achieve 100 times the same square millimeter area.

It is worth noting that researchers have not yet built a complete chip. When using the PCM unit for testing, other hardware components are simulated by the computer. Ambrogio said that researchers hope to check the feasibility of the program before spending a lot of effort on building a complete chip.

They use real PCM equipment because the simulation in this area is not reliable, and the simulation technology of other components has matured. Researchers are very confident in building a complete chip based on this design.

"It currently only competes with GPUs on fully connected neural networks where each neuron is connected to the corresponding neuron in the previous layer," Ambrogio said. "In practice, many neural networks are not fully connected, or only some of the layers are fully connected."

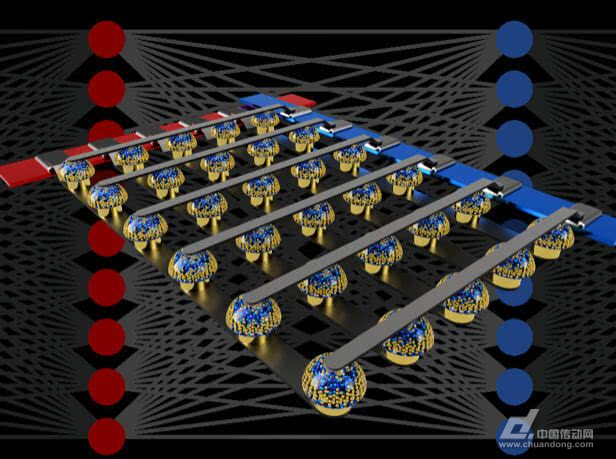

The crossbar switch nonvolatile memory array can accelerate the training of a fully connected neural network by performing calculations at the data locations.

Ambrogio believes that the final chip will be designed to work in tandem with the GPU to handle full-connection layer calculations while performing other tasks. He also believes that effective methods for dealing with fully-connected layers can be extended to other, broader areas.

What ideas can make this kind of special chip possible?

Ambrogio stated that there are two main directions of application: the introduction of AI into personal devices and the improvement of data center operations. The latter is the focus of technology giants - the cost of server operation for these companies has remained high.

Implementing artificial intelligence directly in personal devices can eliminate the privacy concerns caused by transmitting data to the cloud, but Ambrogio believes that its more attractive advantage lies in creating personalized AI.

"In the future, neural network applications can continue to learn experience in your mobile phone and self-driving car," he said. "Imagine: Your phone can talk to you, and can identify and personalize your voice; or your car can be personalized according to your driving habits."

Usb Switch And Socket,Usb Kvm Switch,Usb 3 Switch,Usb Socket

ZHEJIANG HUAYAN ELECTRIC CO.,LTD , https://www.huayanelectric.com